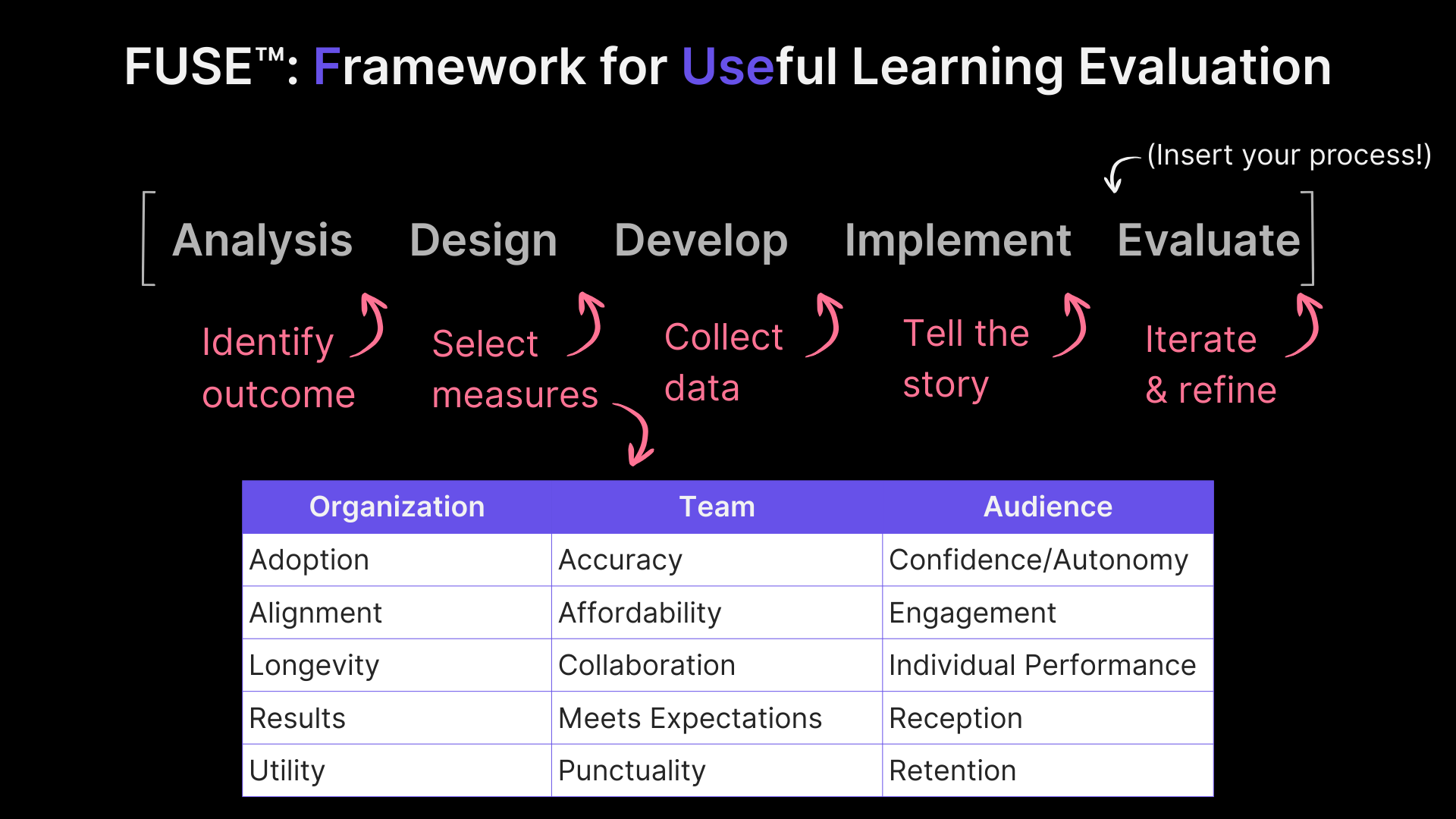

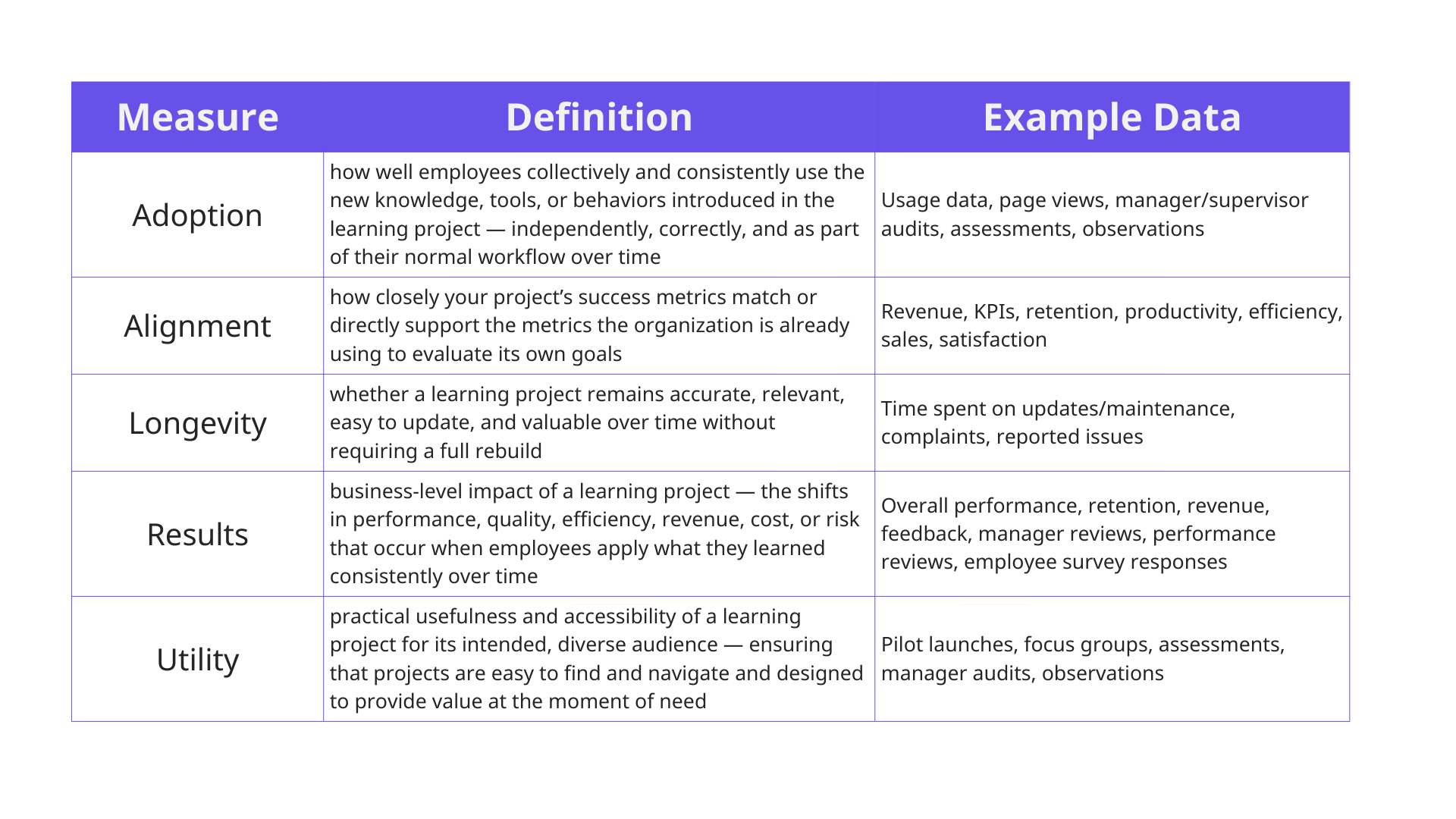

The measures are probably the most important and complex part of the framework.

Here are some important things to know before diving in:

Select each tab below to see the specific definition of every measure in each bucket. These definitions will help you decide which measures to choose for real projects.
